#7 Advanced Prompting for LLMs (that unlocks reasoning) 🧠

7 powerful prompt engineering techniques: Least-to-Most, Self-Ask, CoT, ToT, PAL, ReAct, and Self-Consistency.

Welcome to episode 7 of AI Tribe 1-1-1: a biweekly newsletter designed to spark your interest in AI tools, concepts, applications, and research!

⚙️ Tool: PromptPerfect, a premier tool for prompt engineering!

PromptPerfect is a cutting-edge prompt optimizer designed to streamline prompt engineering for large language models (LLMs), large models (LMs), and LMOps. It features customizable settings, an intuitive interface, multi-goal optimization, and multi-language prompts.

Prompt engineering is the study of how the wording or structure of prompts can be optimized to improve LLM performance. But why exactly are prompting techniques necessary? Aren't LLMs smart enough to understand what you expect and respond reasonably?

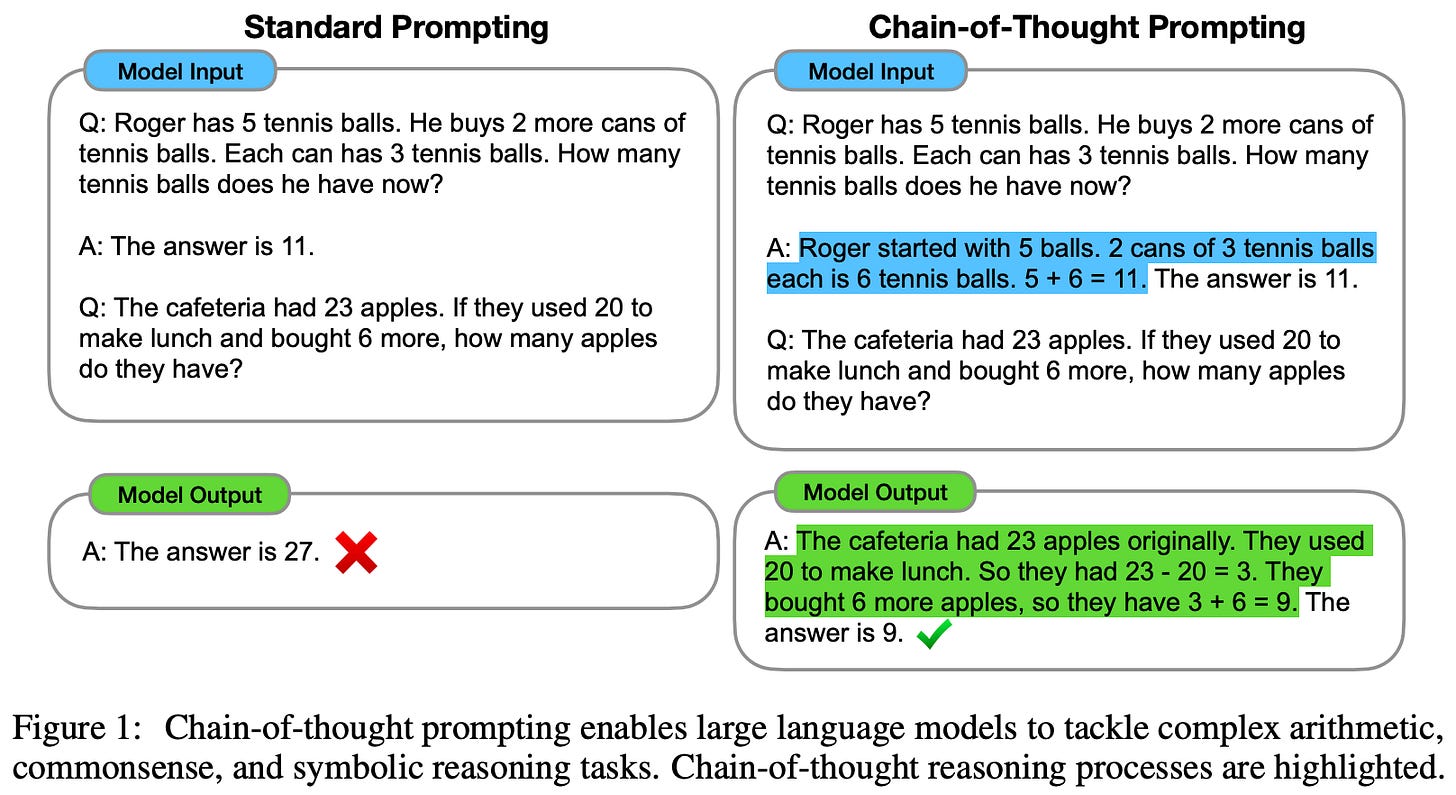

It turns out that today's LLMs are not inherently capable of complex reasoning. Scaling up model size alone has also not proved sufficient for achieving high performance on tasks such as arithmetic, commonsense, and symbolic reasoning. You might think of task-specific fine-tuning, but that comes with the overhead of collecting more data and adapting the model architecture. A simpler solution is to use prompting techniques! You might also have heard about the recent ChatGPT code interpreter, which greatly enhances ChatGPT's abilities.

A prompting-only approach is important because it does not require a large training dataset, and a single model can perform many tasks without loss of generality.

😇 Today’s Recipe: 7 Powerful Prompting Techniques

Most of these techniques build on few-shot prompting: instead of relying solely on what the LLM learned during training, the model builds on the examples you provide in the prompt. These examples enable in-context learning for tasks that require some background knowledge.

Reminder: I have attached a working example at the end. After completing this guide, you can try it yourself with other examples or techniques. It’s really fun!
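As a rough illustration, a few-shot prompt is just a handful of worked input/output pairs followed by the new input. The sketch below is a minimal example under assumptions: the `Input:`/`Output:` labels, the helper name `build_few_shot_prompt`, and the sentiment examples are all made up for illustration, and sending the prompt to a model is left out.

```python
def build_few_shot_prompt(examples, query):
    """Format (input, output) example pairs, then the new input to complete."""
    blocks = [f"Input: {text}\nOutput: {label}" for text, label in examples]
    # The final block leaves "Output:" open so the model fills it in.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Hypothetical examples for a sentiment-labelling task.
examples = [
    ("The battery died after one day.", "negative"),
    ("Setup took two minutes and it just works.", "positive"),
]
prompt = build_few_shot_prompt(examples, "The screen cracked in my pocket.")
print(prompt)
```

The resulting string can be pasted into any chat model; swapping the example pairs is usually enough to retarget the prompt to a different task.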

Chain of Thought (CoT): In this technique, the prompt includes a series of examples that spell out the reasoning steps. The LLM can then apply similar reasoning steps to the given problem and produce its output along with a detailed explanation of how it got there (the thought).

Use case: Arithmetic and commonsense reasoning.
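To make this concrete, here is a minimal sketch of a CoT prompt builder. The worked example, the `Q:`/`A:` layout, and the "The answer is ..." convention used to pull out the final answer are assumptions chosen for illustration, and the model call itself is left out.

```python
import re

# One worked example whose answer spells out the intermediate steps.
COT_EXAMPLE = (
    "Q: A shop has 12 apples. It sells 5 and then receives 8 more. "
    "How many apples does it have?\n"
    "A: The shop starts with 12 apples. After selling 5 it has 12 - 5 = 7. "
    "After receiving 8 more it has 7 + 8 = 15. The answer is 15."
)


def build_cot_prompt(question):
    """Prepend the worked example so the model imitates step-by-step reasoning."""
    return f"{COT_EXAMPLE}\n\nQ: {question}\nA:"


def extract_answer(completion):
    """Return the number after 'The answer is', or None if it is missing."""
    match = re.search(r"The answer is (-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


print(build_cot_prompt("A bus has 20 seats and 14 are taken. How many are free?"))
```

Ending every example with the same closing phrase makes the final answer easy to parse out of the model's longer explanation.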

Source: Wang et al. (2022)

Self-Ask Prompting: Remember when I mentioned asking follow-up questions as a prompting technique in one of my previous posts? When you combine that technique with CoT, you get Self-Ask prompting.

Source: Zhang et al. (2022)
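A self-ask loop might be sketched as follows. The prompt wording ("Follow up:", "Intermediate answer:", "So the final answer is:") follows a commonly used self-ask layout, and `scripted_llm` and `lookup` are hypothetical stand-ins for a real model and a real answering step, so treat this as an illustration rather than a reference implementation.

```python
FOLLOW_UP = "Follow up:"
FINAL = "So the final answer is:"


def self_ask(question, llm, answer_followup, max_steps=5):
    """Let the model ask follow-up questions until it states a final answer."""
    transcript = [f"Question: {question}",
                  "Are follow up questions needed here: Yes."]
    for _ in range(max_steps):
        line = llm("\n".join(transcript)).strip()
        transcript.append(line)
        if line.startswith(FINAL):
            return line[len(FINAL):].strip(), transcript
        if line.startswith(FOLLOW_UP):
            sub_question = line[len(FOLLOW_UP):].strip()
            transcript.append(
                f"Intermediate answer: {answer_followup(sub_question)}")
    return None, transcript


# Hypothetical stand-ins: a scripted "model" and a lookup table of answers.
SCRIPT = [
    "Follow up: How many days passed between March 1st and March 29th?",
    "Follow up: How long is the return window?",
    "So the final answer is: Yes, the purchase is within the return window.",
]
FACTS = {
    "How many days passed between March 1st and March 29th?": "28 days",
    "How long is the return window?": "30 days",
}


def scripted_llm(prompt):
    # Pick the next scripted line based on how many answers exist so far.
    return SCRIPT[prompt.count("Intermediate answer:")]


def lookup(sub_question):
    return FACTS.get(sub_question, "unknown")


answer, transcript = self_ask("Can the customer still return the purse?",
                              scripted_llm, lookup)
print("\n".join(transcript))
```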

ReAct: The idea of ReAct is to combine reasoning with taking actions. Reasoning enables the model to induce, track, and update action plans, while actions allow it to gather additional information from external sources. According to the study, ReAct overcomes the hallucination and error-cascading issues of CoT reasoning by interacting with a knowledge source such as Wikipedia.
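The reason/act cycle can be sketched as a small loop. Everything here is an assumption made for illustration: the `Action: Name[argument]` text format, the `Finish` action, the scripted model, and the tiny dictionary that stands in for an external knowledge source.

```python
import re

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question, llm, tools, max_steps=5):
    """Alternate model steps (thought + action) with tool observations."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += "\n" + step
        match = ACTION_RE.search(step)
        if match is None:
            continue  # no action proposed, ask the model again
        name, argument = match.groups()
        if name == "Finish":
            return argument, transcript
        tool = tools.get(name)
        observation = tool(argument) if tool else f"Unknown action: {name}"
        transcript += f"\nObservation: {observation}"
    return None, transcript


# Hypothetical stand-ins for a knowledge source and a model.
KNOWLEDGE = {"return window": "Returns are allowed within 30 days."}
SCRIPT = [
    "Thought: I should look up the return policy.\n"
    "Action: Search[return window]",
    "Thought: The policy says 30 days.\nAction: Finish[30 days]",
]


def scripted_llm(transcript):
    # Choose the next scripted step from the number of observations so far.
    return SCRIPT[transcript.count("Observation:")]


tools = {"Search": lambda query: KNOWLEDGE.get(query, "No result.")}
answer, transcript = react("How long is the return window?",
                           scripted_llm, tools)
print(transcript)
```

Because each observation is appended to the transcript, the next reasoning step is grounded in what the tool returned rather than in what the model remembers.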

Source: Yao et al. (2022)

Symbolic Reasoning & PAL: Symbolic reasoning involves reasoning about the counts and characteristics of various objects. The Program-Aided Language Model (PAL) method uses LLMs to read natural language problems and generate programs as the reasoning steps. The code is then executed by an interpreter to produce the answer.
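The "generate a program, then run it" step can be sketched like this. The program text below is hard-coded as a stand-in for what a model might generate, and the convention that the result lands in a variable named `answer` is an assumption of this sketch.

```python
# Stand-in for model output on: "I have 3 apples, 2 chairs and 4 bananas.
# How many fruits do I have?"
GENERATED_PROGRAM = """
apples = 3
chairs = 2
bananas = 4
# Only apples and bananas are fruits.
answer = apples + bananas
"""


def run_pal_program(program):
    """Execute generated code and return the value it stored in `answer`."""
    namespace = {}
    # Emptying the builtins limits what the code can call, but this is NOT a
    # real sandbox; untrusted code needs proper isolation.
    exec(program, {"__builtins__": {}}, namespace)
    return namespace["answer"]


print(run_pal_program(GENERATED_PROGRAM))
```

The model only has to translate the problem into code; the interpreter does the counting and arithmetic, which is where LLMs tend to slip.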

Source: Gao et al. (2022)

Self-Consistency with CoT: The idea here is to generate multiple chains of thought and then select the most consistent output as the final answer.

This adds overhead, because the model has to be sampled several times, but it can solve more complex tasks.
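Selecting the most consistent output can be as simple as a majority vote over the final answers. In this sketch, `sample_answer` is a hypothetical function that would run one CoT completion at a non-zero temperature and return only its final answer; here it is replaced by canned values.

```python
from collections import Counter


def self_consistent_answer(sample_answer, question, n_samples=5):
    """Sample several reasoning chains and keep the most frequent answer."""
    answers = [sample_answer(question) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes


# Canned final answers standing in for five sampled chains of thought.
canned = iter(["15", "15", "17", "15", "13"])


def fake_sampler(question):
    return next(canned)


result = self_consistent_answer(fake_sampler, "How many apples are left?")
print(result)
```

The vote count doubles as a rough confidence signal: a narrow win suggests the question may need more samples or a better prompt.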

Use cases: Complex arithmetic and commonsense reasoning.

Source: Wang et al. (2023)

Tree of Thought (ToT): Here, the LLM's ability to generate and evaluate thoughts is combined with search algorithms (e.g., breadth-first search and depth-first search) to enable systematic exploration of thoughts with lookahead and backtracking.

Use case: Complex tasks that require exploration.
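A breadth-first variant of this search can be sketched on a toy puzzle: reach a target number from a start value using the moves `*2` and `+3`. In a real setup, `propose` and `evaluate` would each be LLM calls; here they are plain functions, and the puzzle, the scoring rule, and the beam width are all assumptions made for illustration.

```python
def propose(state):
    """Generate candidate next thoughts (stand-in for an LLM proposal call)."""
    value, path = state
    return [(value * 2, path + ["*2"]), (value + 3, path + ["+3"])]


def evaluate(state, target):
    """Score a thought (stand-in for an LLM evaluation): closer is better."""
    return -abs(target - state[0])


def tree_of_thought_bfs(start, target, depth=3, beam_width=2):
    """Breadth-first search that keeps the best `beam_width` states per level."""
    frontier = [(start, [])]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        for value, path in candidates:
            if value == target:
                return path
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return None


print(tree_of_thought_bfs(1, 10))
```

With a start of 1 and a target of 10, the best-scoring state after two moves is 8, which turns out to be a dead end; the search still succeeds because the beam also kept 7, from which `+3` reaches 10. Keeping more than one branch alive is the point of searching over thoughts.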

Source: Yao et al. (2023)

Least-to-Most Prompting: This goes beyond CoT prompting by first breaking a problem down into smaller sub-problems and then solving each sub-problem in turn. As each sub-problem is solved, its answer is included in the prompt for solving the next one.

Use cases: Out-of-domain generalization, e.g., training a neural network on real photos of planes so that it can also classify sketches of planes.
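The two stages (decompose, then solve in order while carrying answers forward) can be sketched as below. `decompose` and `solve` are hypothetical stand-ins for two separate model calls, and the printing problem is made up for illustration.

```python
def least_to_most(question, decompose, solve):
    """Solve sub-problems in order, feeding earlier answers into later prompts."""
    context, answer = [], None
    for sub_question in decompose(question):
        prompt = "\n".join(context + [f"Q: {sub_question}", "A:"])
        answer = solve(prompt)
        context += [f"Q: {sub_question}", f"A: {answer}"]
    return answer, context


QUESTION = ("A printer prints 6 pages per minute. A report has 4 chapters "
            "of 30 pages each. How long does printing the report take?")
seen_prompts = []  # recorded so the growing context can be inspected


def fake_decompose(question):
    # Easiest sub-problem first; the original question comes last.
    return ["How many pages does the report have?", question]


def fake_solve(prompt):
    seen_prompts.append(prompt)
    # Canned answers: 4 * 30 = 120 pages, then 120 / 6 = 20 minutes.
    return "120 pages" if len(seen_prompts) == 1 else "20 minutes"


final_answer, context = least_to_most(QUESTION, fake_decompose, fake_solve)
print(seen_prompts[-1])
```

The last prompt printed already contains "A: 120 pages", which is what lets the model answer the original question in one easy step.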

Source: Zhou et al. (2023)

Okay, now that you know all the best prompting techniques, it’s time to get your hands dirty! The task is to determine whether the customer is within the 30-day return window and to answer their additional queries. I tried Least-to-Most prompting on Google Bard with the following prompt and got the right answer!

CUSTOMER INQUIRY: I just bought a purse from your website on March 1st. I saw that it was on discount, so I bought a purse for $15, after getting 40% off on the original price. I saw that you have a new discount for purses at 60%. I'm wondering if I can return the purse and have enough store credit to buy 2 of your purses ?

INSTRUCTIONS: You are a customer service agent tasked with kindly responding to customer inquiries. Returns are allowed within 30 days. Today's date is March 29th. There is currently a 60% discount on all purses whose original prices range from $18-$100 . Do not make up any information about discount policies. The customer will receive a full refund for the buying price only, not the original price. What subproblems must be solved before answering this inquiry?
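For anyone who wants to check a model's reply, the sub-problems in this inquiry can be worked out directly from the figures in the prompt. This is a sketch of that arithmetic, not the model's output; the year is an assumption, since the prompt gives only the month and day.

```python
from datetime import date
from fractions import Fraction

# Sub-problem 1: is the purchase inside the 30-day return window?
purchase, today = date(2023, 3, 1), date(2023, 3, 29)  # year is assumed
days_since_purchase = (today - purchase).days
within_window = days_since_purchase <= 30

# Sub-problem 2: what was the original price, given $15 paid after 40% off?
paid = Fraction(15)
original_price = paid / (1 - Fraction(40, 100))

# Sub-problem 3: does the new 60% discount apply ($18-$100 originals only)?
eligible = 18 <= original_price <= 100

# Sub-problem 4: what does one purse cost now, and how many does $15 buy?
new_price = original_price * (1 - Fraction(60, 100))
purses_affordable = paid // new_price

print(days_since_purchase, within_window)  # 28 True
print(original_price, eligible)            # 25 True
print(new_price, purses_affordable)        # 10 1
```

So the return is allowed, but the $15 refund covers one purse at the new $10 price, not two.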

Now you know that prompting really can do many things. All you have to do is steer the model's outputs to get the results you need!